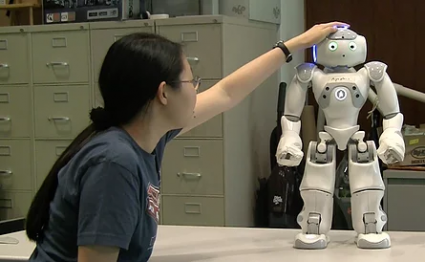

In this episode, our interviewer Lauren Klein speaks with Kim Baraka about his PhD research to enable robots to engage in social interactions, including interactions with children with Autism Spectrum Disorder. Baraka discusses how robots can plan their actions across multiple modalities when interacting with humans, and how models from psychology can inform this process. He also tells us about his passion for dance, and how dance may serve as a testbed for embodied intelligence within Human-Robot Interaction.

Kim Baraka

Kim Baraka is a postdoctoral researcher in the Socially Intelligent Machines Lab at the University of Texas at Austin, and an upcoming Assistant Professor in the Department of Computer Science at Vrije Universiteit Amsterdam, where he will be part of the Social Artificial Intelligence Group. Baraka recently graduated with a dual PhD in Robotics from Carnegie Mellon University (CMU) in Pittsburgh, USA, and the Instituto Superior Técnico (IST) in Lisbon, Portugal. At CMU, Baraka was part of the Robotics Institute and was advised by Prof. Manuela Veloso. At IST, he was part of the Group on AI for People and Society (GAIPS), and was advised by Prof. Francisco Melo.

Dr. Baraka’s research focuses on computational methods that inform artificial intelligence within Human-Robot Interaction. He develops approaches for knowledge transfer between humans and robots in order to support mutually beneficial relationships between robots and humans. Specifically, he has conducted research on assistive interactions, where the robot or human helps their partner achieve a goal, and on teaching interactions. Baraka is also a contemporary dancer, with an interest in leveraging lessons from dance to inform advances in robotics, or vice versa.

PS. If you enjoy listening to experts in robotics and asking them questions, we recommend that you check out Talking Robotics. They have a virtual seminar on Dec 11 where they will be discussing how to conduct remote research for Human-Robot Interaction, something that is very relevant to researchers working from home due to COVID-19.

Transcript

00:01 S1: Robohub, the podcast for news and views on robotics. Hello and welcome back to this week’s episode of the Robohub podcast. Today, we will explore the intersection between robotics and dance with postdoctoral researcher and soon-to-be assistant professor Kim Baraka. His work focuses on computational methods that inform artificial intelligence within human-robot interaction. Oh, and he’s also a contemporary dancer, keen to use lessons from dance to inform advances in robotics. Baraka spoke to our interviewer Lauren about his PhD research, which looked at socially assistive robotics for children with autism at the University of Texas, Austin. He also spoke about his experience as a dancer and how he thinks lessons from dance and models from psychology can inform embodied intelligence and multi-agent interaction. Welcome to Robohub. Can you introduce yourself?

01:07 S2: Yeah, hi Lauren, first of all, thank you for this interview. My name is Kim Baraka. I’m a PhD student at the Robotics Institute at Carnegie Mellon University, and I’m also affiliated with the Technical University of Lisbon. So I’m pursuing a dual degree in robotics, and in my research I’m focusing mainly on algorithms for human-robot interaction. Apart from my scientific career, I’m also a dancer. I had classical dance training, and recently I’m more interested in improvisational dance, so I perform, teach and create work in parallel with my scientific career.

01:54 S1: Great, thank you so much. Can you tell us a little bit about what aspects of human-robot interaction you’re most interested in?

02:02 S2: Yeah, definitely. So I’m mainly interested in algorithms for planning, and when we talk about planning, we’re talking about a robot that is treated as a decision-making agent. So imagine a human and a robot interacting together: the robot has to constantly make decisions about its behavior. And when I talk about behavior, my research focus is mainly on social behavior. That means not only motion, but also communicative behavior, which includes verbal behaviors but also non-verbal behaviors such as gesturing, gazing, maybe even touch sometimes. All of these, what we could call multimodal interactions, are the types of actions I’m thinking of when I’m thinking about robot actions. The planning of those social behaviors or actions is something that I try to model in a way that we can use in a computer science framework: mathematical models of humans and of how to adapt to different contexts or situations. I’m also specifically interested in adaptation to different types of individuals. Just to give you an example, the focus of my thesis is on a domain called Socially Assistive Robotics, and more specifically, we’re working with individuals with autism. So imagine a robot that is interacting with such individuals in the context of therapy: the robot has to interact in a social way to train individuals with autism to have better communication and social skills. It is really, really key to know how to best interact with different types of individuals, given their different abilities, different severities of the disorder and so on. So I’m interested mostly in models of interaction that could be useful for better adaptation of robot behavior, and I look at models that usually come from outside of computer science or robotics.

04:17 S2: So I’m looking at models of interaction that come from psychology, or standardized tools that are developed for therapy, and trying to see how these models could be useful to bootstrap the efficiency of an interaction, more specifically a social interaction.

04:37 S2: So that’s kind of a broad overview of my PhD thesis research.

04:44 S1: [Lauren] Great. So you mentioned that you have to tailor these models to different types of individuals. How does a robot understand what type of individual it is interacting with?

04:58 S2: [Kim] So depending on the context, we usually identify a set of features that characterize the individual you’re interacting with. In the case of autism therapy, the features that matter in an interaction are usually features that code the response to some social behaviors, or the initiation of some social situations or actions. For example, diagnosis information in the case of autism implicitly codes how a certain individual would respond to certain situations or prompts that are typically used in social interaction. To give you an example, this could be how the person uses gaze to initiate or modulate interaction, their use of gestures such as pointing, and how they respond to things like joint attention: whether they understand gestures, whether they understand facial expressions. If we have that information, it is really useful for robots to model the possible responses to what the robot would do. With this understanding, robots could better plan ahead and adapt to ensure a more efficient or more fluid interaction. So different varieties of autism are usually associated with

06:36 S2: different requirements in terms of how much stimulation you should give. So if you have a child who struggles to respond to gestures, or with something called response to joint attention, and who wouldn’t understand particularly well what a pointing gesture means, you might want to really exaggerate the way you point or the way you gaze, or you might want to activate the target that you want them to look at, so that they are able to respond effectively to those stimuli. On the other hand, if you have an individual who is good at joint attention, or who responds well to this type of stimulation, you may not need to go all the way. You might just need a gaze shift, so the robot could save some energy and even challenge the child to become better at detecting those more subtle behaviors. That’s really useful in therapy, ’cause we always want to find the balance between being overly explicit in the robot’s actions and being too subtle, at the risk of not getting a satisfactory response from the child, ’cause I’m working mainly with children, of course. Yeah, so that’s mainly my response to your question.

08:10 S1: [Lauren] Great. I would imagine that it takes some time for the robot to learn and understand these features about the human. Can you describe what the timeline looks like for how long the robot spends exploring the capabilities of the child versus when it decides to employ certain techniques? Is this a repeated process where, within an interaction, it’s learning and then exploiting, learning and then exploiting, or is it separated into two distinct phases? And how do you time this process?

08:48 S2: [Kim] In an ideal situation, and this doesn’t necessarily relate specifically to the autism domain, you would want a robot to have an idea of who you are as a person, or how you interact, or maybe an idea of humans in general, and then be able, through interaction, to fine-tune the way it interacts with you. That’s the general framework: having what we call a prior on your response to certain actions, and then collecting data and learning to fine-tune what we call the policy of the robot, that is, how the robot behaves or makes decisions. What we’ve explored so far in our research is how we can construct this prior first: how can we have initial information that might be different for different individuals? One of the things we thought about is that in a healthcare context, you do have access to information about a certain patient. All patients are not the same. You cannot treat all patients the same way you would, for example, when you’re developing a technology product and you expect the average user to use it in a certain way; this average patient doesn’t really exist. It’s really important to know how to categorize, understand

10:16 S2: and model patients in different ways. So if you have access to this healthcare information, to the health record, which could be a diagnosis, you can use that information directly on the robot and say, “Okay, given that this is the type of autism that this person has, or the severity of that person’s autism, maybe this is how the robot should model the person, or this is how the robot should behave.” Another problem is that a lot of times, the way people respond to humans is not the same as the way they respond to robots. So what we need to understand is how this model, built on what I would call human-human interaction data, can transfer or generalize to human-robot interaction. That’s a research problem in itself, and it is not always clear. It might be highly individual-dependent: you might have some individuals who respond in a very similar way to humans and robots, but you might have some kids, or some individuals in general, who are more comfortable with robots than humans, or less comfortable with robots than humans, or afraid of robots. So we really need to understand this mapping, and whether it is useful in a certain situation. What we do in the studies that we’ve done with kids is we always replicate the typical tests that human

11:47 S2: therapists usually engage in and compare the data, trying to see: can we use the data that we have from a human interaction directly, or do we need to collect new data, or give more importance to the data the robot collected?
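One common way to formalize “a prior on your response to certain actions” that is later fine-tuned with the robot’s own data is a Beta-Bernoulli model. The sketch below is an illustration under that assumption, not the method used in the studies discussed here: a response rate estimated from human testing becomes a Beta prior whose strength, the hypothetical `prior_weight` pseudo-count, sets how much it is trusted relative to what the robot observes.

```python
from dataclasses import dataclass


@dataclass
class ResponseBelief:
    """Beta-distributed belief about the probability that a child responds to
    one specific robot prompt (an illustrative model, not from the interview)."""
    alpha: float  # pseudo-count of responses
    beta: float   # pseudo-count of non-responses

    @classmethod
    def from_human_estimate(cls, p_human: float, prior_weight: float) -> "ResponseBelief":
        # Encode a response rate observed in human-human testing as a prior worth
        # `prior_weight` imaginary trials; prior_weight=0 ignores the human data.
        if not 0.0 <= p_human <= 1.0:
            raise ValueError("p_human must be in [0, 1]")
        return cls(alpha=p_human * prior_weight, beta=(1.0 - p_human) * prior_weight)

    def update(self, responded: bool) -> None:
        # Conjugate update after one robot-delivered prompt.
        if responded:
            self.alpha += 1.0
        else:
            self.beta += 1.0

    def mean(self) -> float:
        total = self.alpha + self.beta
        if total == 0:
            raise ValueError("no prior and no observations yet")
        return self.alpha / total


# Hypothetical numbers: human testing suggested a 70% response rate, but the
# child responds to only 1 of 4 prompts delivered by the robot.
belief = ResponseBelief.from_human_estimate(p_human=0.7, prior_weight=2.0)
for outcome in [True, False, False, False]:
    belief.update(outcome)
print(round(belief.mean(), 3))  # prints 0.4
```

A small `prior_weight` corresponds to giving more importance to the data the robot collected; a large one keeps the estimate close to the human-derived value.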

12:04 S1: [Lauren] So you have these two ways that the robot collects a prior on these children. One is the medical data that gets input to the system, perhaps manually or by a professional, and then you also kind of confirm these priors by having the robot do certain exploratory actions with the children. And this is so important because there’s such a difference between children, and children with autism are such a diverse population, that you want to start the interaction with some sort of prior, as opposed to starting the same way with everyone and building it up over time, because you want the beginning of the interaction to be meaningful as well, not just later on in the interaction.

12:53 S2: [Kim] Okay, exactly. But actually, it turns out, based on the studies that we ran, that we cannot trust the human data as much, because of exactly that problem: the way that children with autism, especially younger children with autism, respond to robots can really differ from how they respond to humans. So in a way, it’s really necessary for the robot to do an assessment before starting any kind of interaction. The assessment that we do usually follows a typical algorithm that therapists would use: it goes hierarchically through increasingly prompting actions, increasing the stimulation level and seeing at which stimulus the kid responds, and then uses that information to personalize or adapt the interaction. It turns out that, in the specific tasks we looked at, we ended up not using the human diagnosis information, because we thought it was not reliable enough to be used directly. So we have a first phase in which the robot is safely interacting with the child and going through these increasingly stimulating actions until it has a better understanding of how the child responds, and once the robot has this understanding, it uses that information to target specific prompts and basically personalize the interaction.
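The hierarchical assessment described above can be sketched as a least-to-most prompting loop: deliver prompts in order of increasing stimulation and record the first level at which the child responds. The prompt names and the `child_responds` callback below are hypothetical stand-ins; a real system would drive the robot and its perception at that point.

```python
from typing import Callable, Optional, Sequence

# Hypothetical prompt hierarchy, ordered from least to most stimulating.
PROMPTS = [
    "gaze shift",
    "gaze shift + verbal cue",
    "gaze shift + pointing",
    "exaggerated pointing + target activation",
]


def assess_response_level(
    prompts: Sequence[str],
    child_responds: Callable[[str], bool],
) -> Optional[int]:
    """Return the index of the least stimulating prompt that elicited a
    response, or None if the child responded to none of them."""
    for level, prompt in enumerate(prompts):
        if child_responds(prompt):
            return level  # stop escalating as soon as the child responds
    return None


# Simulated child who only responds once pointing is involved.
def simulated_child(prompt: str) -> bool:
    return "pointing" in prompt


print(assess_response_level(PROMPTS, simulated_child))  # prints 2
```

The returned level is the kind of assessment result that the later phase would use to target specific prompts for that child.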

14:23 S2: So this is kind of the insight we got from real-world interactions. What we eventually want to develop, and this is research that we’ve been doing, is a way to model these things more mathematically: being able to quantify these concepts in terms of probabilities and in terms of costs. What does it mean for an action to be costly? We’re using concepts from decision theory or utility theory to build a model of a child and, assuming that we have a perfect model of the child, to ask what sequence of actions the robot should take to maximize some utility. In our case, the utility has to do with the “just-right” challenge for that child: how do you select your prompts or actions so that you don’t oversimplify things or make it too easy for the child, but also don’t make it so difficult that the child doesn’t respond and basically loses the flow of the interaction? There’s always this trade-off in a naturalistic context when you have a robot interacting with a human.

15:35 S2: And especially in therapy, where you’re supposed to challenge the patient to get better over time. So how do you model these things mathematically? This is the latest research we’ve been doing in that context. Yeah.
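To make “the sequence of actions that maximizes some utility” concrete, here is a toy version under several assumptions not stated in the interview: each prompt level has a known cost and a known response probability, responses to different prompts are independent, the robot stops at the first response, and an unanswered sequence incurs a fixed `failure_penalty` for losing the flow of the interaction. Maximizing utility is written here as minimizing expected cost; all numbers are hypothetical, and the brute-force search only suits a handful of levels.

```python
from itertools import combinations
from typing import Sequence, Tuple


def expected_cost(seq: Sequence[int], cost: Sequence[float],
                  p_respond: Sequence[float], failure_penalty: float) -> float:
    """Expected cost of trying the prompt levels in `seq`, in order, stopping
    at the first response; pay `failure_penalty` if none succeeds."""
    total = 0.0
    p_still_waiting = 1.0  # probability that no earlier prompt got a response
    for level in seq:
        total += p_still_waiting * cost[level]  # prompt is only delivered if still waiting
        p_still_waiting *= 1.0 - p_respond[level]
    return total + p_still_waiting * failure_penalty


def best_escalation(cost: Sequence[float], p_respond: Sequence[float],
                    failure_penalty: float) -> Tuple[Tuple[int, ...], float]:
    """Brute-force the cheapest escalation: any subset of levels, kept in
    increasing order of stimulation (combinations preserve input order)."""
    best_seq: Tuple[int, ...] = ()
    best_val = expected_cost(best_seq, cost, p_respond, failure_penalty)
    for k in range(1, len(cost) + 1):
        for seq in combinations(range(len(cost)), k):
            val = expected_cost(seq, cost, p_respond, failure_penalty)
            if val < best_val:
                best_seq, best_val = seq, val
    return best_seq, best_val


# Hypothetical model of one child: cost and response probability both rise
# with the stimulation level.
COST = [0.1, 0.3, 0.6, 1.0]
P_RESPOND = [0.2, 0.5, 0.8, 0.95]
seq, val = best_escalation(COST, P_RESPOND, failure_penalty=2.0)
print(seq, round(val, 3))
```

Changing the costs, the probabilities for a given child, or the penalty shifts which levels are worth including, which is the kind of per-child trade-off described above.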

15:51 S1: [Lauren] Right. So I’ve heard you mention a few different things that you’re trying to optimize. One of them seems to be how much stimulation, perhaps the volume or expressiveness of gestures, the robot should be providing to the child, because different children with autism have different tolerances or different requirements for how much stimulation is needed in order to have a response. Is that correct?

16:17 S2: [Kim] That’s right, exactly. Yeah, that’s exactly right.

16:19 S1: [Lauren] And then the other thing that you’re trying to optimize is the level of difficulty of the activity or skill that you’re trying to teach, and you’re using decision theory to understand what this optimal level is. Can you explain a little bit more about that? How does decision theory work in these different contexts?

16:41 S2: [Kim] Right, so the first model that we came up with simply models the level of stimulation or the difficulty of a prompt as a probability that a child would respond. We have this probabilistic framework in which, if you make the prompt easy enough or stimulating enough, you have a higher probability of the child responding. So it’s not just binary, the child will respond or will not respond; we’re actually quantifying these probabilities. For every robot action, we have a measure of the probability of success: out of one hundred trials, how many would be successful? Sometimes a child would understand and respond; other times there might be environmental factors that make the child not respond, for whatever reason. Being able to put a number on those probabilities is crucial in this model. The other thing is putting a number on the costs of such actions. In therapy, for example, the cost of an action has to do with a therapeutic cost. So if an action is overly stimulating or too easy, that’s a high cost, because you’re going too far from what a natural interaction would look like in the real world, and you’re not challenging the child enough, you’re making it maybe too easy, so you’re not promoting improvement over time.

18:13 S2: So this is how we model this trade-off. You don’t want to be spending too much cost if you don’t need it, but you also don’t want to be spending too little cost, because then you might have to repeat many times, and you might lose the flow of the interaction. In our case, we’re looking at things like joint attention, so we’re looking at a joint attention task, but you can think of it in terms of an educational scenario where you’re tailoring the difficulty of a certain activity or problem. If you make it too difficult for students, they might get discouraged and decide not to continue the interaction, but if you make it too easy, they might get a good grade, but you’re not challenging them enough to get better over time. So we’re trying to model this trade-off mathematically and have algorithms that would eventually generate optimal teaching sequences or, in our case, social behaviors on the robot side. Yeah.
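The “too little cost means repeating many times” side of this trade-off has a simple closed form under an extra assumption not made in the interview: that the robot repeats the same prompt a, with cost c(a) and independent response probability p(a), until the child responds. The number of attempts is then geometrically distributed, so

```latex
\mathbb{E}[\text{attempts}(a)] = \sum_{k=1}^{\infty} k\,(1-p(a))^{k-1}\,p(a) = \frac{1}{p(a)},
\qquad
\mathbb{E}[\text{cost}(a)] = \frac{c(a)}{p(a)}.
```

With hypothetical numbers, a subtle prompt with c = 0.1 and p = 0.1 has an expected cost of 1.0 (ten attempts on average), a moderate one with c = 0.3 and p = 0.5 costs 0.6, and a very explicit one with c = 0.6 and p = 0.9 costs about 0.67, so in this toy case the middle option is cheapest in expectation.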

19:21 S1: [Lauren] Right, and I’m sure one of the main factors in terms of making this model successful is having a way of evaluating those costs, so how do you measure online, whether there’s a cost, whether there’s a reward and the value that you’re going to assign to those costs and rewards, what are you grabbing from the interaction in order to do that?

19:47 S2: [Kim] So we model this cost as something that we measure a priori; it is something that we know. It is domain-specific knowledge: the costs are intrinsic to the actions that the robot is going to take. What we do is show the robot performing certain actions to experts, and the experts rate the robot’s behavior. We ask them things like how natural or how explicit the robot’s behavior is, and those ratings are then converted into cost functions, actual continuous values on a scale from 0 to 1. So, based on the domain knowledge, we already know what the costs of the robot’s actions are. What we need to learn on the fly, or assess, is the probability of response of a specific child, which varies from child to child. So the costs are on what we call the provider side, the robot side, because they are intrinsic to the actions, but what varies from child to child is how these actions will create either successes or failures on the receiver side, the child side.

21:08 S2: We’ve collected a bunch of data from interactions with children, and we’ve run some regression on the data to convert a given assessment of a child, that is, where in this hierarchy of actions the child actually responds, into actual probability values. Is it an 80% chance of responding, or 20%? This transition is not straightforward, because therapists don’t evaluate kids in terms of probabilities; those are things that humans wouldn’t be able to assess accurately. What humans do is basically very binary: they try things, and if it works, they keep a record of that. So how do you go from that kind of framework to one where you actually quantify things, and then use these numbers to compute plans that are provably optimal for a given model, assuming the model is correct, of course? Another challenge is to make sure that this model stays the same over time, and that is a whole other type of research on adaptation and online learning. For now, we’re trying to understand this first step: given a model, how do you deal with it?
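The regression step, turning “where in the hierarchy the child responded” into a response probability for every prompt level, could look like the following. This is a sketch assuming logistic regression on a single feature, the distance between a prompt’s level and the child’s assessed level; the interview does not specify the regression model, and the training pairs below are made up for illustration.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(data: Sequence[Tuple[float, int]], lr: float = 0.1,
                 epochs: int = 5000) -> Tuple[float, float]:
    """Fit p(response) = sigmoid(w * x + b) by batch gradient descent on the
    average log-loss, where x = prompt level minus the child's assessed level."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = sigmoid(w * x + b) - y  # derivative of log-loss w.r.t. the logit
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def response_probabilities(assessed_level: int, n_levels: int,
                           w: float, b: float) -> List[float]:
    """Predicted probability of a response at each prompt level for one child."""
    return [sigmoid(w * (level - assessed_level) + b) for level in range(n_levels)]


# Made-up (x, responded) pairs pooled across hypothetical past sessions.
DATA = [(-2, 0), (-2, 0), (-2, 0), (-2, 1),
        (-1, 0), (-1, 1), (-1, 0), (-1, 1),
        (0, 1), (0, 1), (0, 1), (0, 0),
        (1, 1), (1, 1), (1, 1), (1, 1)]
w, b = fit_logistic(DATA)
probs = response_probabilities(assessed_level=2, n_levels=4, w=w, b=b)
print([round(p, 2) for p in probs])
```

Together with expert-rated costs on the robot side, per-level probabilities like these are the inputs a cost-aware prompt planner would need.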

22:28 S1: [Lauren] Right. What’s the biggest challenge that you guys are facing?

22:34 S2: [Kim] The biggest challenge is to make assumptions that are going to hold true in the real world. You can work in simulation as long as you want and assume many things, sometimes because they’re intuitive, sometimes from looking at literature from other fields. You might expect a child with autism spectrum disorder to respond to a certain activity in the same way a typically developing child would, or you might think that results from education could be applied to therapeutic scenarios, and a lot of times these results don’t generalize. So it’s really challenging to make assumptions that are useful for constructing a scenario to test your algorithms on, then actually test those assumptions in the real world and, if they fail, still be able to get something out of your evaluation. The more assumptions you make, the more complex your scenario can be, but also the riskier it is to engage in such studies. So the dance between theory and practice is, I think, a really, really challenging one, given that testing with special-needs populations is really hard to do: recruiting the children is a real challenge, and so is working with the parents.

24:03 S2: It’s a very high-stress kind of environment. So you really need to be very mindful of how to invest your energy and effort into what you want to test in a real-world scenario and what you can assume and test in simulation. And I think that balance is quite hard to achieve sometimes.

24:28 S1: [Lauren] I’m sure, yeah, which is why doing that testing, instead of completely relying on the prior, is so important. Great, well, thank you so much. Something else you mentioned at the beginning is that you’re a dancer. Can you tell me a little bit about that, and how you balance these two interests that you have? What type of dance do you do?

24:55 S2: [Kim] So since high school, I’ve been involved in science and in the arts at the same time, and those were really two separate interests that I’ve been pursuing. They’re actually passions, I would say, because I wouldn’t consider my dance work purely a hobby; I’m doing it quite seriously. And I never really got to mix my dance background with my science until a project I did in Lisbon, in which there were a bunch of dancers in residence in a lab who were trying to interact with a robot through motion and physical contact. I thought this was a great opportunity to join them and see what we could do on a technical level to facilitate this type of interaction. And one thing I’ve recently learned in dance that has drastically helped me think about interaction in robotics is that, especially in improvisation, it’s all about decision-making. It’s all about making decisions in real time, in an embodied way: you are a body in a space, or a body interacting with another body, and you’re basically a multi-agent system that needs to make decisions in real time, and this is what we’re trying to do with robots.

26:21 S2: So I see a lot of potential in using dance as a testbed for embodied intelligence and mental decision-making, maybe not necessarily for artistic purposes, because there are a lot of dance practices that are not just about the beauty of the form and aesthetics, but actually about really intelligent decision-making with your body in real time, with a lot of inspiration from the martial arts, for example, where you have a clear goal: you want to defend yourself, you want to escape a certain offense from your partner. All of these complex dynamics, I think, are really, really interesting to explore from an AI point of view, from an agent or decision-making point of view. So I see this kind of interaction as a very, very interesting domain for future research, because it encompasses social dynamics, maybe not verbal communication, but certainly non-verbal communication and coordination, and also the embodied intelligence of actual physical interaction. We use a lot of physical contact in improvisation: it’s a type of dance where you have two or more bodies sharing weight, and what emerges are behaviors in which the system as a whole is moving, and you’re

27:57 S2: kind of losing your individuality as an agent. Through sharing weight and working with gravity, bodies are evolving, and it’s the point of contact that moves you, which I think is a very interesting concept. It has been around in multi-agent systems when you talk about emergent behaviors and distributed systems; this is kind of the same thing. I just don’t think that a lot of people have thought of it that way, so I think there’s a lot that dance can bring to AI in general, and to embodied intelligence more specifically. So who knows, maybe in the future I’ll merge those two things.

28:41 S1: [Lauren] It’s interesting you mention that there really is this combination of a physical interaction and a social interaction. I’m thinking of a lift, for example: if you’re going to lift someone up, you have to communicate to them that you’re ready and that you’re able to handle the move in a certain way, but you also have to apply forces in a certain direction and in a certain way in relation to how the other person is moving. So it’s really this multimodal interaction.

29:12 S2: Yeah, definitely. I think there’s so much social content in this type of interaction, especially in improv, that people tend to disregard, and sometimes this social interaction is extremely subtle. You’re not explicitly communicating things; you’re just looking out of the corner of your eye at where the other person’s body is and what their attention cone is, and you’re making all these inferences. People who are good at improvising in dance are not necessarily the dancers who are good at performing choreography; it’s a different part of your brain that you’re training. So I think there are so many layers of intelligence in this type of dance improvisation. The social dynamics are maybe more subtle, but they are definitely there, especially with regard to communication, coordination and working with different social roles. You have social structures, the first one being leader and follower, or no leader, no follower, just working as a team, but also group dynamics, and then you can just escalate into all of the different layers of social interaction that humans have.

30:35 S1: [Lauren] Which are also all issues that we’re looking to explore in robotics as well. Can you speak a little bit personally now, too? As PhD students, many of us are, of course, trying to figure out how to balance interdisciplinary interests, or interests in and out of the lab, whether they’re robotics-related or just personal hobbies. How do you balance these two interests and passions that you have?

31:08 S2: [Kim] Yeah, so I would say it has been hard in the past to do that, especially when you have a schedule of courses that is quite rigid. As you evolve through your PhD, I think it’s easier to manage your time the way you want. Things become more flexible: I am able to go to training every day, for example, whether it’s in the morning or in the evening, and I shift my work hours around it. But I think it’s a skill that I developed naturally over all these years of balancing both. For me, those two things are very complementary. Having to work your brain so many hours a day, but also having something physical and creative that complements the other side, is something that I’ve always sought. I always felt that if I’m focusing on only one of these things, I feel incomplete in some way, and so in a way you find time for it because the will is there. It doesn’t feel like, “Oh, I have to go to dance training now.

32:18 S2: I have to go to the lab.” Maybe I dance today, but then I research all day tomorrow. I think the duality in my personality is something that drives this naturally, but it definitely needs a lot of time management skills; I think that is the key. As long as you are passionate about something, my advice is not to think that because you’re doing a PhD and going into a high-stress career, you have to say, “Oh yeah, I’m going to sacrifice what I really like, or my hobby.” If you are really passionate about something, it’s going to help you with your other stuff, definitely. So it’s worth investing time, and if it’s something that drives and motivates you, you can always find time for it, definitely.

33:18 S1: [Lauren] Great, well, thank you so much for joining us today, and we look forward to seeing where your research and dance go.

33:25 S1: And that’s it for today. But before we go, if you enjoy listening to experts in robotics and asking them questions, we would recommend that you also check out Talking Robotics. Talking Robotics is a series of scientific virtual seminars about robotics and its interaction with other relevant fields. The ultimate goal is to promote reflection, dialogue and networking for researchers at any stage of their career. You can join their next session on December 11th, where they will be discussing how to conduct remote research for human-robot interaction, something that feels really relevant given that we are all at home due to the pandemic. The Robohub podcast will be back in about two weeks’ time. Until then, goodbye. Robohub, the podcast for news and views on robotics.

All audio interviews are transcribed and edited for clarity with great care; however, we cannot assume responsibility for their accuracy.